Abstract

We study personalized decision modeling in settings where individual choices deviate from population-optimal predictions. We introduce ATHENA—an Adaptive Textual‑symbolic Human‑centric Reasoning framework—built in two stages: (1) a group‑level symbolic utility discovery procedure (LLM‑augmented symbolic search) learns compact, interpretable utility forms; and (2) an individual‑level semantic adaptation step refines a personal template with TextGrad to capture each person’s preferences and constraints. On Swissmetro (travel mode choice) and COVID‑19 vaccine uptake tasks, ATHENA consistently outperforms utility‑based, ML, and LLM baselines, improving F1 by ≥ 6.5% over the strongest alternatives while maintaining calibrated probabilistic predictions. Together, these stages bridge classic Random Utility Maximization with textual semantic context, yielding interpretable structure and personalized reasoning.

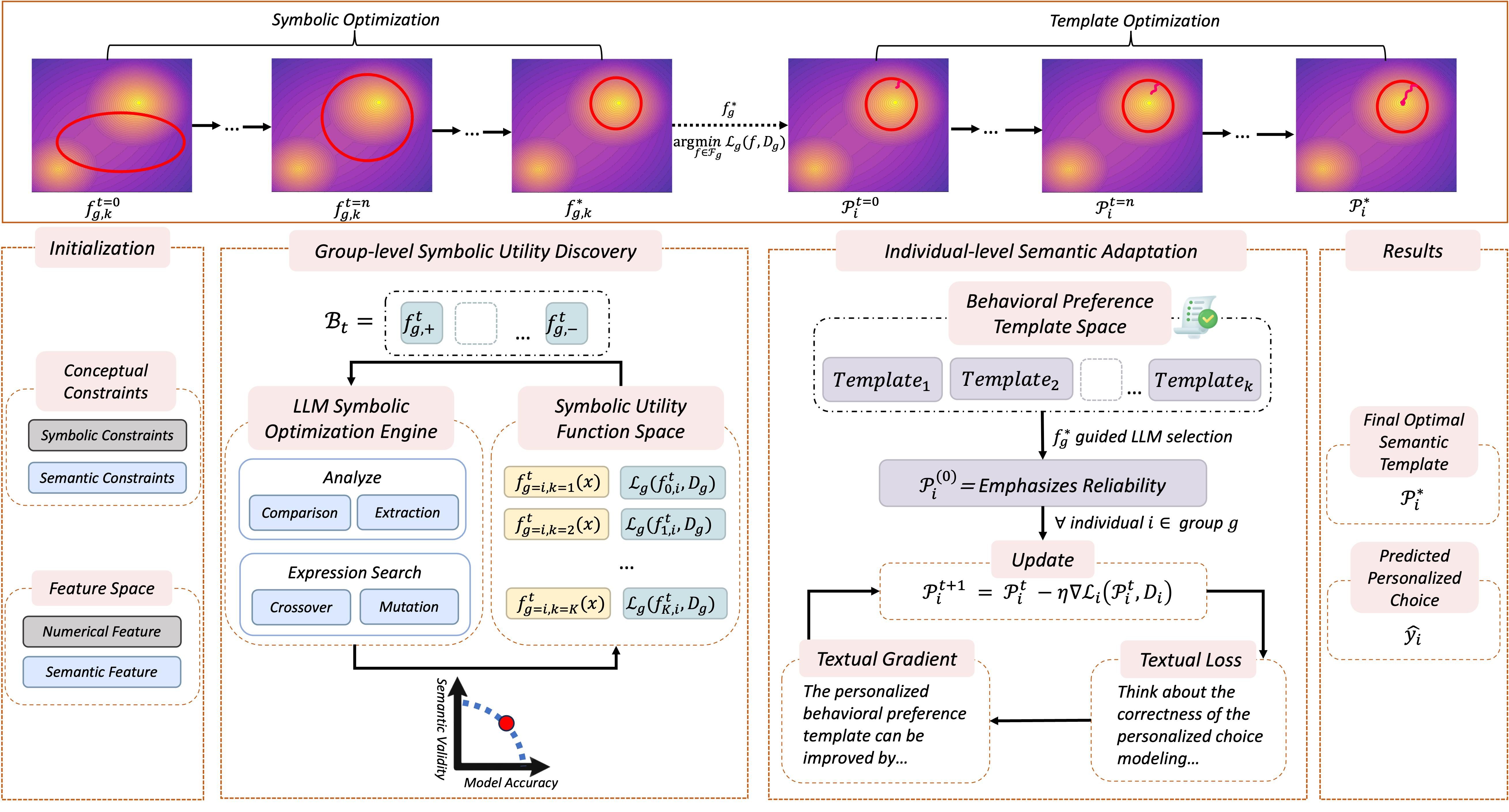

Method at a Glance

Group-level Symbolic Utility Discovery samples candidate symbolic utility functions from an LLM-conditioned distribution, guided by the concept library, the symbolic library, and prior feedback.

Individual-level Semantic Adaptation refines each person’s semantic template through iterative updates. This process personalizes the template using individual data and semantic gradients, capturing heterogeneous preferences and contextual constraints.

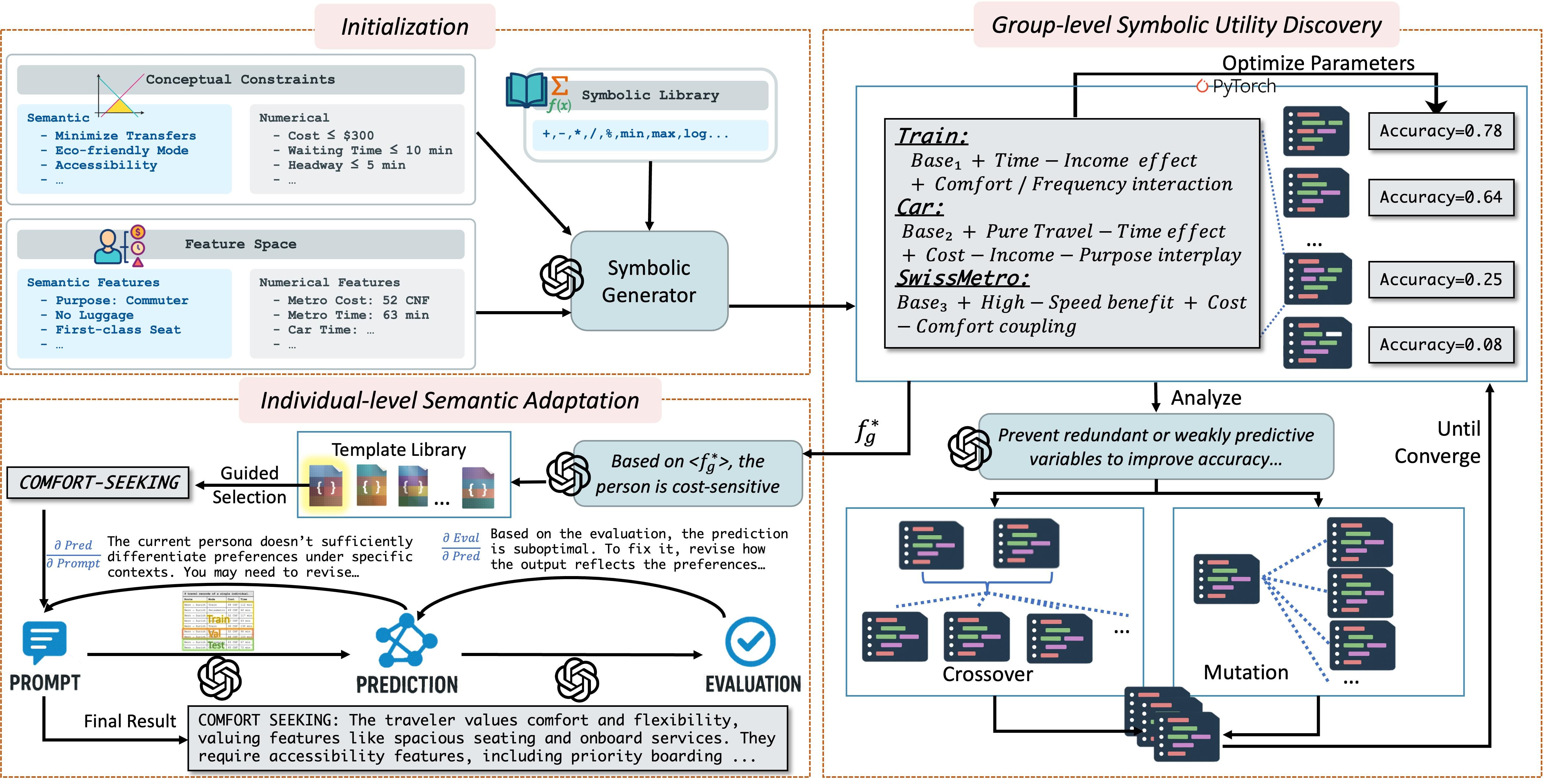

Figure 1: Overview of the ATHENA framework. The group-level symbolic utility discovery stage uses LLM-driven symbolic optimization to find compact utilities. The individual-level semantic adaptation stage refines personalized templates via TextGrad to model individual decision rules.

Two‑Stage Pipeline

- Group‑Level Symbolic Utility Discovery: LLMs sample candidate symbolic utility functions (Eq. 3) from a structured symbolic–semantic space, guided by a concept library and feedback. Through iterative evaluation and mutation/crossover, the search converges to the symbolic utility that best captures group‑level decision regularities.

- Individual‑Level Semantic Adaptation: Using the discovered utility as a strong prior, each individual’s textual semantic template is optimized by TextGrad (Eq. 7) to incorporate heterogeneous personal preferences and constraints. The adaptation continues until the template converges.

Personalized Decision Inference integrates the learned symbolic utility with the adapted semantic template to generate individualized predictions that reflect both group‑level reasoning and personal context.
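To make the link to Random Utility Maximization concrete, the minimal sketch below turns per-alternative utilities into choice probabilities with the standard multinomial logit (softmax) rule. The linear utility form, the coefficient values, and the attribute names `time` and `cost` are hypothetical illustrations, not the utilities ATHENA discovers, and this page does not state that ATHENA uses exactly this link function.

```python
import math

def choice_probabilities(utilities):
    """Multinomial logit: P(j) = exp(U_j) / sum_k exp(U_k)."""
    # Subtracting the max utility avoids overflow and leaves probabilities unchanged.
    m = max(utilities.values())
    exp_u = {alt: math.exp(u - m) for alt, u in utilities.items()}
    total = sum(exp_u.values())
    return {alt: e / total for alt, e in exp_u.items()}

def linear_utility(attrs, beta_time=-0.02, beta_cost=-0.01):
    """Hypothetical compact utility: linear in travel time and cost."""
    return beta_time * attrs["time"] + beta_cost * attrs["cost"]

# Hypothetical trip attributes for three travel modes (minutes, currency units).
alternatives = {
    "train": {"time": 120, "cost": 50},
    "swissmetro": {"time": 60, "cost": 70},
    "car": {"time": 150, "cost": 40},
}
utilities = {alt: linear_utility(a) for alt, a in alternatives.items()}
probs = choice_probabilities(utilities)
predicted = max(probs, key=probs.get)  # alternative with the highest probability
```

With these made-up numbers the fastest mode has the highest utility, so it also gets the highest choice probability. A personalized model would shift these utilities per individual.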

Figure 2: ATHENA pipeline applied to travel-mode choice. Using Swissmetro as an example, Initialization encodes constraints and symbolic features. In Group-level optimization, LLMs sample and prune utility formulas. In Individual adaptation, the selected utility guides a personalized prompt for each individual to capture heterogeneity.

Algorithm 1 · ATHENA Optimization Flow

Require: demographic group, dataset, domain concepts, symbolic building blocks

- Initialize the search state
- Stage 1: Group-Level Symbolic Utility Discovery
- for each search iteration do
    - Sample symbolic utility functions
    - Update the feedback from the evaluated candidates
    - Select the best function
    - if the stopping condition is met then break
- Stage 2: Individual-Level Semantic Adaptation
- for each individual do
    - Initialize the semantic template
    - for each adaptation step do: update the template with TextGrad (Eq. 7)
- return the best group utility and the adapted templates, and use them to predict decisions
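The control flow of Algorithm 1 can be sketched in a few lines of Python. This is a toy stand-in under stated assumptions, not the authors' implementation: random proposals and Gaussian mutations replace LLM sampling, a numeric per-option offset replaces the TextGrad-optimized text template, and all names (`trip`, `hit_rate`, `discover_group_utility`, `adapt_individual`) and the stopping rule are hypothetical.

```python
import random

FEATURES = ["time", "cost"]  # hypothetical symbolic building blocks

def trip(train, car, choice):
    """Build one record; train and car are (time, cost) pairs."""
    options = {"train": dict(zip(FEATURES, train)), "car": dict(zip(FEATURES, car))}
    return {"options": options, "choice": choice}

def predict(weights, options, bias=None):
    """Return the option with the highest utility (weighted features plus offset)."""
    bias = bias or {}
    def utility(name):
        linear = sum(weights[f] * options[name][f] for f in FEATURES)
        return linear + bias.get(name, 0.0)
    return max(options, key=utility)

def hit_rate(weights, records, bias=None):
    """Fraction of records whose observed choice is predicted correctly."""
    hits = sum(predict(weights, r["options"], bias) == r["choice"] for r in records)
    return hits / len(records)

def sample_candidates(rng, incumbent, n=8):
    """Stand-in for LLM sampling: random proposals, then mutations of the incumbent."""
    if incumbent is None:
        return [{f: rng.uniform(-1, 1) for f in FEATURES} for _ in range(n)]
    return [{f: incumbent[f] + rng.gauss(0, 0.2) for f in FEATURES} for _ in range(n)]

def discover_group_utility(records, rng, max_iters=30, target=1.0):
    """Stage 1: sample candidates, score them, keep the best, stop at the target."""
    best, best_score = None, -1.0
    for _ in range(max_iters):
        for cand in sample_candidates(rng, best):
            score = hit_rate(cand, records)
            if score > best_score:
                best, best_score = cand, score
        if best_score >= target:  # hypothetical stopping condition
            break
    return best, best_score

def adapt_individual(weights, records, steps=5, step_size=0.5):
    """Stage 2 stand-in: learn per-option offsets from this person's mistakes."""
    bias = {}
    for _ in range(steps):
        for r in records:
            guess = predict(weights, r["options"], bias)
            if guess != r["choice"]:
                bias[r["choice"]] = bias.get(r["choice"], 0.0) + step_size
                bias[guess] = bias.get(guess, 0.0) - step_size
    return bias

rng = random.Random(0)
group_records = [
    trip((2.0, 1.0), (1.0, 2.0), "car"),
    trip((1.0, 1.0), (2.0, 1.5), "train"),
    trip((1.5, 2.0), (1.0, 1.0), "car"),
]
group_utility, group_score = discover_group_utility(group_records, rng)

# One person who takes the train even when it is slower.
person_records = [trip((1.5, 1.0), (1.0, 1.0), "train")]
personal_bias = adapt_individual(group_utility, person_records)
```

The split mirrors the two stages: the group utility is fitted once on pooled data, and each individual only learns a small correction on top of it. In ATHENA that correction is expressed in text rather than as numeric offsets.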

Results

Overall Performance

| Category | Method | LLM Model | Swissmetro Acc. ↑ | Swissmetro F1 ↑ | Swissmetro CE ↓ | Swissmetro AUC ↑ | Vaccine Acc. ↑ | Vaccine F1 ↑ | Vaccine CE ↓ | Vaccine AUC ↑ |
|---|---|---|---|---|---|---|---|---|---|---|
| LLM-Based | Zeroshot | gemini-2.0-flash | 0.5920 | 0.2940 | 0.9257 | 0.6561 | 0.5800 | 0.5092 | 0.8328 | 0.7607 |
| LLM-Based | Zeroshot | GPT-4o-mini | 0.6300 | 0.2757 | 2.7258 | 0.3657 | 0.5433 | 0.5387 | 0.8562 | 0.7395 |
| LLM-Based | Zeroshot-CoT | gemini-2.0-flash | 0.5880 | 0.3478 | 0.9415 | 0.6331 | 0.5800 | 0.5073 | 0.8436 | 0.7526 |
| LLM-Based | Zeroshot-CoT | GPT-4o-mini | 0.6420 | 0.2960 | 0.8957 | 0.6237 | 0.5500 | 0.5353 | 0.8540 | 0.7465 |
| LLM-Based | Fewshot | gemini-2.0-flash | 0.7580 | 0.7027 | 8.7244 | 0.7956 | 0.5667 | 0.5740 | 12.0324 | 0.7053 |
| LLM-Based | Fewshot | GPT-4o-mini | 0.6815 | 0.4945 | 7.0029 | 0.7395 | 0.5067 | 0.5097 | 6.6110 | 0.6891 |
| LLM-Based | TextGrad | gemini-2.0-flash | 0.5568 | 0.2980 | 1.2011 | 0.5400 | 0.4241 | 0.4014 | 5.7813 | 0.6363 |
| LLM-Based | TextGrad | GPT-4o-mini | 0.6500 | 0.3620 | 0.9079 | 0.5364 | 0.5084 | 0.4962 | 4.5412 | 0.6709 |
| LLM-Based | ATHENA | gemini-2.0-flash | 0.7679 | 0.7222 | 0.9041 | 0.8387 | 0.6797 | 0.5968 | 0.7610 | 0.8370 |
| LLM-Based | ATHENA | GPT-4o-mini | 0.8134 | 0.7655 | 1.0863 | 0.8825 | 0.7345 | 0.7161 | 0.7551 | 0.8704 |
| Utility Theory | MNL | / | 0.6101 | 0.3887 | 0.8353 | 0.7074 | 0.4150 | 0.1955 | 1.0510 | 0.4301 |
| Utility Theory | CLogit | / | 0.5714 | 0.2424 | 0.8916 | 0.5976 | 0.4150 | 0.1955 | 1.0510 | 0.5000 |
| Utility Theory | Latent Class MNL | / | 0.6101 | 0.3967 | 0.8175 | 0.7182 | 0.1950 | 0.1088 | 1.0986 | 0.5000 |
| Machine Learning | Logistic Regression | / | 0.5620 | 0.5570 | 0.9310 | 0.7460 | 0.6500 | 0.6690 | 0.7630 | 0.8330 |
| Machine Learning | Random Forest | / | 0.7100 | 0.7050 | 0.7380 | 0.8810 | 0.6300 | 0.6470 | 0.7290 | 0.8420 |
| Machine Learning | XGBoost | / | 0.7080 | 0.7050 | 0.7040 | 0.8810 | 0.6300 | 0.6480 | 1.1420 | 0.8150 |
| Machine Learning | BERT | / | 0.7246 | 0.4994 | 0.7037 | 0.8811 | 0.6350 | 0.6541 | 0.7409 | 0.8168 |
| Machine Learning | TabNet | / | 0.6375 | 0.4060 | 0.7887 | 0.8810 | 0.6650 | 0.6684 | 0.8968 | 0.8147 |
| Machine Learning | MLP | / | 0.6475 | 0.6386 | 0.7626 | 0.8350 | 0.6068 | 0.6062 | 0.9320 | 0.8205 |
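As a reading aid for the Acc., F1, and CE columns, the following minimal sketch computes the three metrics from predicted class probabilities. It assumes F1 is macro-averaged and that CE is the mean negative log-probability of the true class. The page does not state either convention, and the labels and probabilities below are made up for illustration.

```python
import math

def accuracy(y_true, y_pred):
    """Fraction of exact label matches."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 (assumed averaging scheme)."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in labels:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)

def cross_entropy(y_true, probs, eps=1e-12):
    """Mean negative log-probability assigned to the true class."""
    return -sum(math.log(max(p[t], eps)) for t, p in zip(y_true, probs)) / len(y_true)

# Made-up labels and predicted probabilities for a two-class task.
y_true = ["a", "b", "a", "b"]
probs = [
    {"a": 0.9, "b": 0.1},
    {"a": 0.2, "b": 0.8},
    {"a": 0.4, "b": 0.6},  # wrong, but only mildly confident
    {"a": 0.3, "b": 0.7},
]
y_pred = [max(p, key=p.get) for p in probs]

acc = accuracy(y_true, y_pred)
f1 = macro_f1(y_true, y_pred)
ce = cross_entropy(y_true, probs)

# Same hard predictions, but the single mistake is made with near-certainty.
overconfident = list(probs)
overconfident[2] = {"a": 0.001, "b": 0.999}
ce_overconfident = cross_entropy(y_true, overconfident)
```

In the second case accuracy and F1 are unchanged while CE rises sharply. This shows how a method can score well on Acc. and F1 yet report a large CE when its wrong predictions are made with high confidence.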

BibTeX

@inproceedings{zhao2025athena,
  title = {Personalized Decision Modeling: Utility Optimization or Textualized-Symbolic Reasoning},
  author = {Yibo Zhao and Yang Zhao and Hongru Du and Hao Frank Yang},
  booktitle = {The Thirty-Ninth Annual Conference on Neural Information Processing Systems (NeurIPS)},
  year = {2025}
}